Pursuits: Gaze at Objects in Motion

The main types of eye movements studied for HCI are fixations, which occur when we focus on an object, and saccades, which we use to rapidly shift our attention in the visual field. Fixations are detected when the eyes are relatively still in the head to keep an object in the line of sight. However, if we look at an object that is in motion, our eyes produce smooth pursuit movement, anticipating the object’s path to keep it in focus.

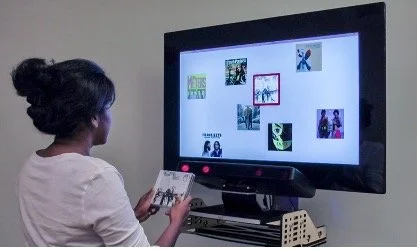

State-of-the-art gaze interaction is based on fixations and dwell time for pointing at and selecting static objects on the interface. Our work on Pursuits broke new ground by developing gaze interfaces that are instead based on motion [1]. When we look at an object that is in motion, our eyes follow it effortlessly with smooth pursuit movement, which is distinct from the saccadic movement that is otherwise observed. Our novel insight was that this enables robust detection of attention and inference of input from the correlation of eye movement and object trajectories [2]. As the technique is based on relative motion, it does not require prior calibration of the eye tracker and supports “walk up and use” gaze input.
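
The correlation idea can be illustrated with a minimal sketch. It assumes gaze and target positions are sampled at the same instants over a short window; the function names, the 0.8 threshold and the use of the per-axis minimum are illustrative assumptions, not the published implementation. Because Pearson correlation ignores offset and positive scale, the uncalibrated gaze in the example still matches the target it follows.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def match_target(gaze, targets, threshold=0.8):
    """Return the name of the target whose trajectory best matches the gaze, or None.

    gaze:    list of (x, y) gaze samples in arbitrary, uncalibrated units
    targets: dict mapping a name to a list of (x, y) positions over the same window
    Assumption: every target moves along both axes; a target that is constant
    on one axis scores 0.0 here and is never selected.
    """
    gx = [p[0] for p in gaze]
    gy = [p[1] for p in gaze]
    best_name, best_score = None, threshold
    for name, trajectory in targets.items():
        tx = [p[0] for p in trajectory]
        ty = [p[1] for p in trajectory]
        # Both axes must correlate, so the weaker axis decides the score.
        score = min(pearson(gx, tx), pearson(gy, ty))
        if score > best_score:
            best_name, best_score = name, score
    return best_name


# One second of samples at a hypothetical 30 Hz.
t = [i / 30 for i in range(30)]
circle = [(math.cos(2 * math.pi * s), math.sin(2 * math.pi * s)) for s in t]
line = [(s, s) for s in t]

# Uncalibrated gaze following the circle: shifted and scaled, same shape.
gaze = [(100 + 0.5 * x, -40 + 0.7 * y) for x, y in circle]

print(match_target(gaze, {"circle": circle, "line": line}))  # prints: circle
```

A fixation (constant gaze) correlates with no moving target in this sketch, so `match_target` returns `None` for it.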

We designed Orbits as a new type of graphical user interface element for pursuit-based gaze control. Orbits display circular motion that can be varied in direction, phase and speed to be uniquely selectable by corresponding eye motion. In a landmark work, we showed that orbits can be as small as 0.6° of visual angle and still robustly induce matching smooth pursuit eye movement [3]. This enabled development of a first smartwatch interface entirely controllable by gaze alone. The smartwatch takes uncalibrated input from a head-worn eye tracker, which it matches against orbital motion on the watch’s face.
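
To see why direction, phase and speed can each make an orbit distinguishable, the following sketch generates orbit trajectories and compares them. The `orbit_position` and `similarity` helpers and all parameter values are hypothetical, chosen for illustration; the similarity measure (mean dot product of unit-circle positions) is a simplification, not the matching method used in Orbits.

```python
import math


def orbit_position(t, speed_hz=1.0, phase=0.0, clockwise=False):
    """Position at time t (seconds) on a unit-radius orbit around the origin.

    "Clockwise" refers to a y-up coordinate system.
    """
    direction = -1.0 if clockwise else 1.0
    angle = direction * 2 * math.pi * speed_hz * t + phase
    return (math.cos(angle), math.sin(angle))


def similarity(traj_a, traj_b):
    """Mean dot product of two unit-circle trajectories, in the range [-1, 1]."""
    dots = [ax * bx + ay * by for (ax, ay), (bx, by) in zip(traj_a, traj_b)]
    return sum(dots) / len(dots)


# One second of samples at a hypothetical 60 Hz.
times = [i / 60 for i in range(60)]

base = [orbit_position(t) for t in times]
shifted = [orbit_position(t, phase=math.pi) for t in times]    # opposite phase
reversed_ = [orbit_position(t, clockwise=True) for t in times]  # opposite direction
faster = [orbit_position(t, speed_hz=2.0) for t in times]       # double speed

for name, traj in [("same", base), ("phase", shifted),
                   ("direction", reversed_), ("speed", faster)]:
    print(f"{name:10s} {similarity(base, traj):+.2f}")
```

Over a full revolution, only the identical orbit scores close to 1; an orbit in opposite phase scores close to -1, and orbits that differ in direction or speed average out to about 0.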

The two examples illustrate a design focus on the nature of eye movement and how to design with it, in contrast to a focus on eye tracking and how it can be utilised as an input device. Pursuits and Orbits both highlight how naturally and fluidly the eyes interact with motion, which inspires fundamental research on motion-based control in GEMINI.

[1] Pursuits: spontaneous interaction with displays based on smooth pursuit eye movement and moving targets

Mélodie Vidal, Andreas Bulling and Hans Gellersen

UbiComp ’13: ACM Pervasive and Ubiquitous Computing

[2] Pursuit calibration: making gaze calibration less tedious and more flexible

Ken Pfeuffer, Mélodie Vidal, Jayson Turner, Andreas Bulling and Hans Gellersen

UIST ’13: ACM Symposium on User Interface Software and Technology

[3] Orbits: Gaze Interaction for Smart Watches using Smooth Pursuit Eye Movements

Augusto Esteves, Eduardo Velloso, Andreas Bulling and Hans Gellersen

UIST ’15: ACM Symposium on User Interface Software and Technology

Attention to objects displayed in motion can be inferred from corresponding smooth pursuit eye movement.

Orbits are widgets that are triggered by gaze pursuits, and enable robust gaze control even for very small devices.