Gaze and Synchronous Gestures

We have a natural ability to synchronise body movement with external motion. In recent work we have developed the idea of using synchronous movement for dynamic control of interactive objects. Objects display distinct motion patterns which users can match with gestures performed in synchrony, to dynamically establish a control relationship. This is compelling for casual interaction, as it does not require any prior calibration or learning of predefined gestures.

The pursuit-based gaze input paradigm we pioneered relies on motion correlation to associate a user’s eye movement with an object in the field of view displaying the corresponding motion. In follow-on research we have generalised motion correlation as an input principle that can be employed with any body movement [1].

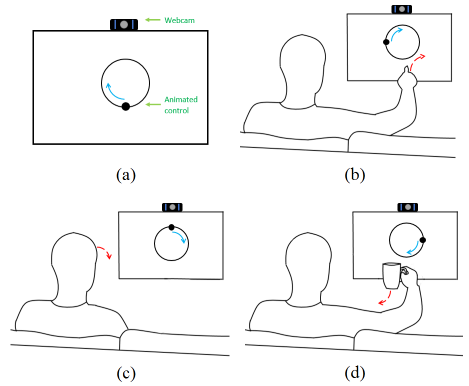

In the TraceMatch system, we demonstrated the principle with on-screen controls that display orbiting motion differentiated by direction, phase and speed. Users can select any of the controls by moving in synchrony, and synchronous input is detected by matching “moving pixels” in front of the screen. This provides a high degree of abstraction and flexibility: control can be gained dynamically by any user in front of the screen, with movement produced by hand, head or any other body part, and without first having to put down any object they might be holding [2]. We further extended the concept in the MatchPoint system, which uses motion correlation to infer a spatial mapping, so that users can bind an object through synchronous movement and then manipulate it straight away [3].
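The selection principle described above can be illustrated with a minimal sketch. It assumes that synchrony is scored with a per-axis Pearson correlation between the input trajectory and each control's orbit over a short window; this is a common way to implement motion correlation, not necessarily what TraceMatch does internally. The function names, the control names, the sampling rate and the 0.8 threshold are all hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def orbit(direction, phase, speed, n_samples=60, dt=1 / 30):
    """Sample (x, y) points on a unit circle.

    direction: +1 counter-clockwise, -1 clockwise
    phase: starting angle in radians
    speed: revolutions per second
    """
    points = []
    for i in range(n_samples):
        angle = phase + direction * 2 * math.pi * speed * i * dt
        points.append((math.cos(angle), math.sin(angle)))
    return points


def match_score(input_path, target_path):
    """Lower of the two per-axis correlations, so x and y must both agree."""
    ix, iy = zip(*input_path)
    tx, ty = zip(*target_path)
    return min(pearson(ix, tx), pearson(iy, ty))


def select_target(input_path, targets, threshold=0.8):
    """Name of the best-correlated target, or None if none exceeds the threshold."""
    best_name, best_score = None, threshold
    for name, path in targets.items():
        score = match_score(input_path, path)
        if score > best_score:
            best_name, best_score = name, score
    return best_name


# Three hypothetical controls, differentiated by direction and phase.
targets = {
    "volume": orbit(direction=+1, phase=0.0, speed=0.5),
    "channel": orbit(direction=-1, phase=0.0, speed=0.5),
    "power": orbit(direction=+1, phase=math.pi, speed=0.5),
}

# Simulated input: the "channel" motion in arbitrary sensor units
# (different scale and offset), as a hand tracked by a camera might produce.
hand_path = [(100 + 40 * x, 200 + 40 * y) for x, y in targets["channel"]]
selected = select_target(hand_path, targets)
```

Because correlation is invariant to scale and offset, the input does not need to be calibrated to screen coordinates; only the shape and timing of the motion matter. This is one reason a correlation-based score suits input from any body part.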

In GEMINI we aim to study how synchronous gestures are guided by the eyes, to develop multimodal techniques for spontaneous, fast and precise gestural interaction.

[1] Motion Correlation: Selecting Objects by Matching their Movement

Eduardo Velloso, Marcus Carter, Joshua Newn, Augusto Esteves, Christopher Clarke and Hans Gellersen

ACM Transactions on Computer-Human Interaction (TOCHI) 24, 3, 2017

[2] Remote Control by Body Movement in Synchrony with Orbiting Widgets: an Evaluation of TraceMatch

Christopher Clarke, Alessio Bellino, Augusto Esteves and Hans Gellersen

PACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 1, 3, 2017

[3] MatchPoint: Spontaneous Spatial Coupling of Body Movement for Touchless Pointing

Christopher Clarke and Hans Gellersen

UIST '17: ACM Symposium on User Interface Software and Technology