Multi-user Gaze-based Interaction Techniques on Collaborative Touchscreens

Ken Pfeuffer, Jason Alexander and Hans Gellersen

ETRA '21: ACM Symposium on Eye Tracking Research and Applications

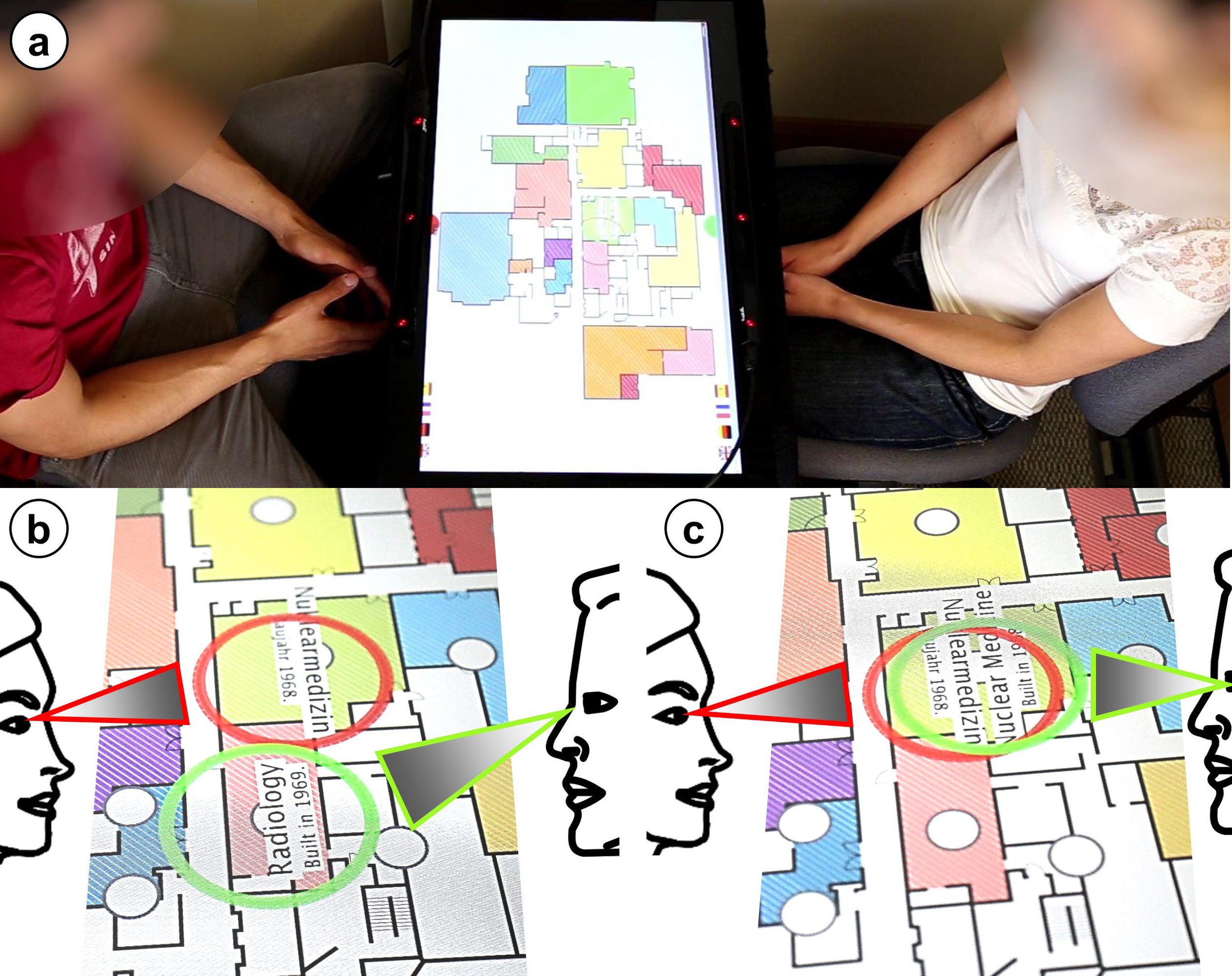

Eye gaze offers implicit, fast, and hands-free input for a variety of use cases, but most techniques focus on single-user contexts. In this work, we present an exploration of gaze techniques for users interacting together on the same surface. We explore interaction concepts that exploit two states in an interactive system: 1) users visually attending to the same object in the UI, or 2) users focusing on separate targets. With the increasing availability of eye-tracking, interfaces can exploit these states, for example to dynamically personalise content on the UI for each user, and to provide a merged or compromised view of an object when both users' gaze falls upon it. These concepts are explored with a prototype horizontal interface that tracks the gaze of two users facing each other. We build three applications that illustrate different mappings of gaze to multi-user support: an indoor map with gaze-highlighted information, an interactive tree-of-life visualisation that dynamically expands on users' gaze, and a world map application with gaze-aware fisheye zooming. We conclude with insights from a public deployment of this system, pointing toward the engaging and seamless ways in which eye-based input integrates into collaborative interaction.
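The two states named in the abstract (both users attending to the same object, or each focusing on a separate target) can be illustrated with a minimal sketch. This is not the authors' implementation: the `Target` class, the rectangular hit-testing, the function names, and the extra `"none"` state for gaze that misses every target are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Target:
    """Hypothetical UI object with an axis-aligned bounding box."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, point: Point) -> bool:
        px, py = point
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def attended_target(targets: Sequence[Target], gaze: Point) -> Optional[Target]:
    """Return the first target whose bounding box contains the gaze point."""
    for target in targets:
        if target.contains(gaze):
            return target
    return None


def gaze_state(targets: Sequence[Target], gaze_a: Point, gaze_b: Point) -> str:
    """Classify two users' gaze as 'shared', 'separate', or 'none'.

    'none' is an assumption of this sketch: it covers the case where at
    least one user is not looking at any known target.
    """
    a = attended_target(targets, gaze_a)
    b = attended_target(targets, gaze_b)
    if a is None or b is None:
        return "none"
    return "shared" if a == b else "separate"
```

An application could branch on the returned state, for instance showing a merged view for `"shared"` and per-user content for `"separate"`. A real system would also need to handle gaze noise and calibration, which this sketch ignores.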

Ken Pfeuffer, Jason Alexander, and Hans Gellersen. 2021. Multi-user Gaze-based Interaction Techniques on Collaborative Touchscreens. In ACM Symposium on Eye Tracking Research and Applications (ETRA '21 Short Papers). Association for Computing Machinery, New York, NY, USA, Article 26, 1–7.

BibTeX

@inproceedings{10.1145/3448018.3458016,
author = {Pfeuffer, Ken and Alexander, Jason and Gellersen, Hans},
title = {Multi-User Gaze-Based Interaction Techniques on Collaborative Touchscreens},
year = {2021},
isbn = {9781450383455},
publisher = {Association for Computing Machinery},
address = {New York, NY, USA},
url = {https://doi.org/10.1145/3448018.3458016},
doi = {10.1145/3448018.3458016},
booktitle = {ACM Symposium on Eye Tracking Research and Applications},
articleno = {26},
numpages = {7},
keywords = {collaboration, shared user interface, gaze input, eye-tracking, multi-user interaction},
location = {Virtual Event, Germany},
series = {ETRA '21 Short Papers}
}